Canary deployments with just Azure DevOps. Well, with Azure DevOps and Azure Kubernetes Service. But that’s it, no additional software required.

Although there are plenty of third-party solutions out there that address canary deployments, you might be able to get it done with “just” Azure DevOps. And in some cases, less is actually more! To be fair, these other solutions provide a more holistic approach to deploying cloud-native apps. If you are interested in those, these are the ones I know or have worked with:

- Spinnaker: https://www.spinnaker.io

- Harness: https://harness.io

- Istio: https://istio.io

If you just want to get some control over releasing new functionality in a modern cloud-native fashion, you can read on 🙂

Canary releases allow you to expose new code to a small group within the app’s “population”. This requires you to examine the canary carefully and look for unexpected behavior. If nothing hints at the app malfunctioning, you can decide to cut over and roll out the new code to the entire group.

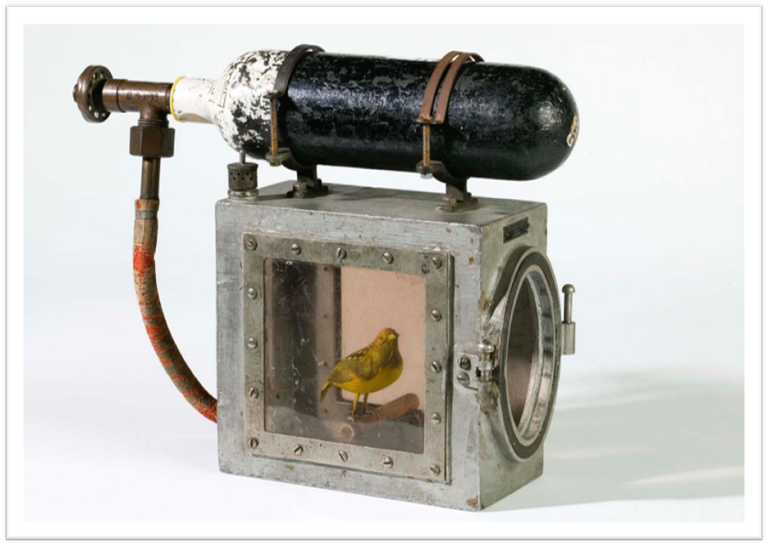

The backstory on the bird

For those interested, canary releases get their name from a technique that was used in the mining industry. Miners would carry a canary in a small cage to detect dangerous mine gas (chiefly carbon monoxide, but also methane). If the bird dropped, they would evacuate for fear of poisoning or explosions and “air” the mine shafts before returning to their job.

Deployment scenario

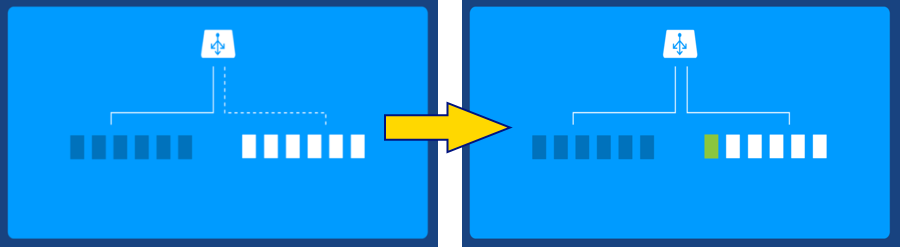

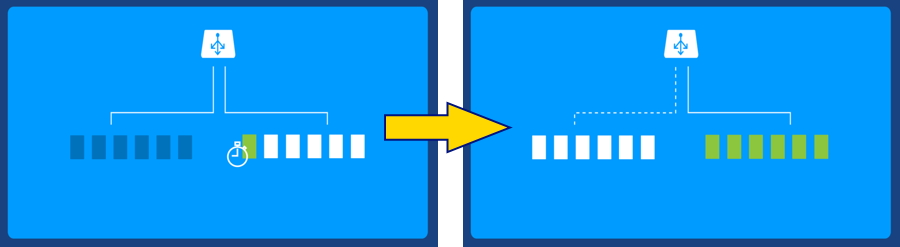

The concept is simple: expose new code to a small percentage of the users. If the new code doesn’t fail, expose it to all users. In deployment terms (in our case with Docker images, pods and Kubernetes in mind), it could look something like this:

First, all users are on the “left”, then a canary is deployed:

After canary analysis, the whole group is switched over:

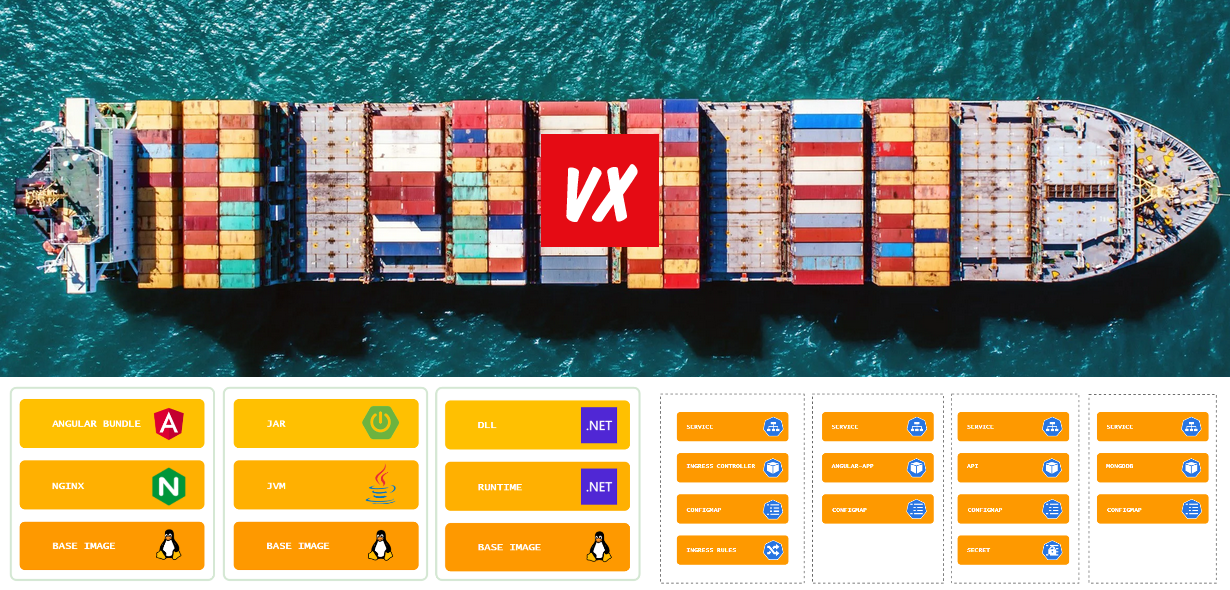

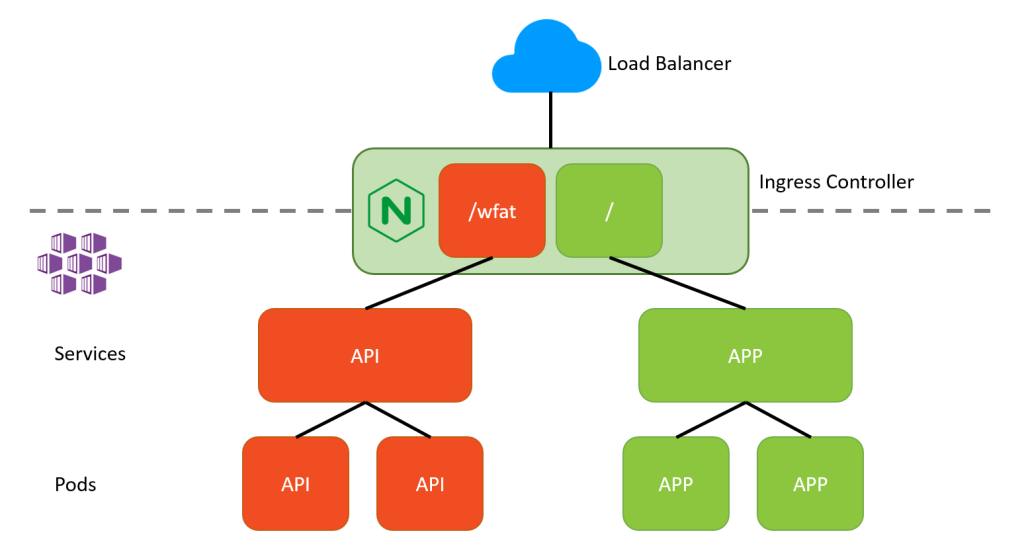

Our app will be deployed as a Docker image on a managed Kubernetes cluster, so we need an Azure Container Registry and an Azure Kubernetes Service cluster. These components don’t require any special setup, but they need to be in place before we can deploy our app.

Within Kubernetes we usually end up with an Ingress type of setup. In the following example, we have both an app and a backend api running behind an Ingress Controller:

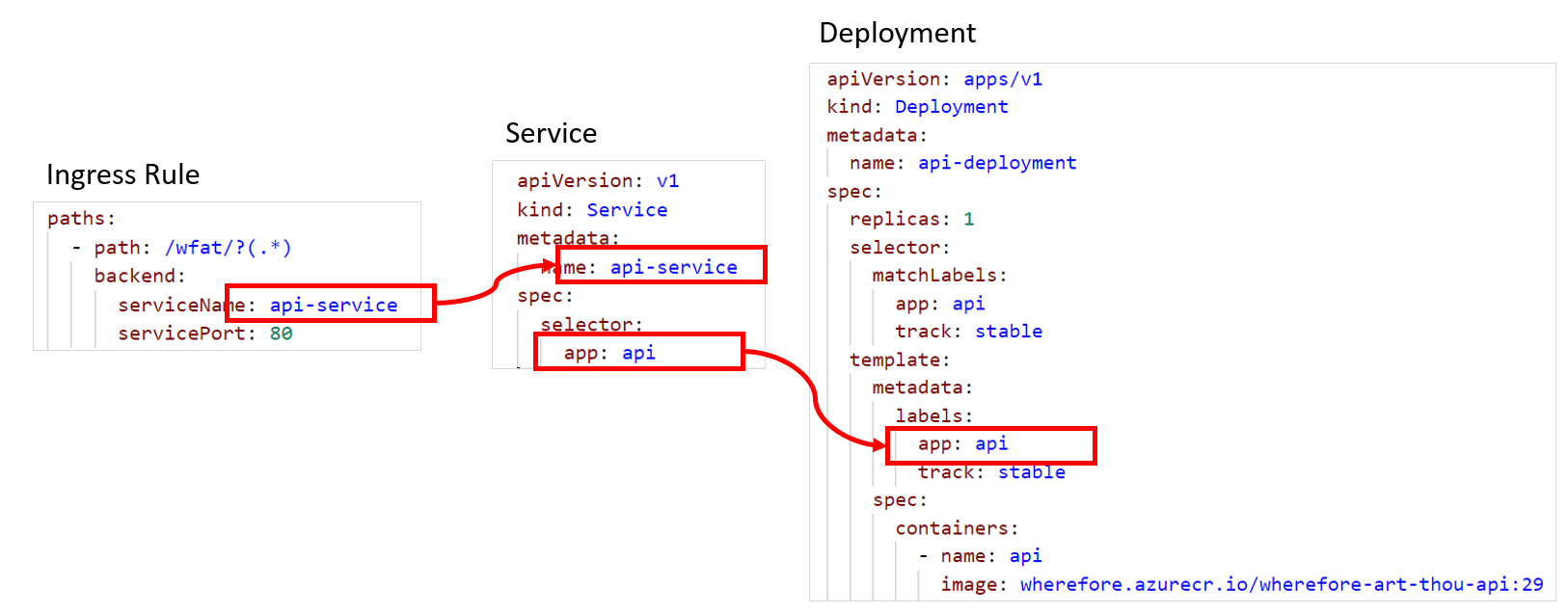

This setup ensures that incoming traffic for the App and Api gets routed through Kubernetes Services to the pods running the containers. The way this works is that the Ingress rules map the path of an incoming request URI to a Service name, and the Service selects its pods based on their labels:

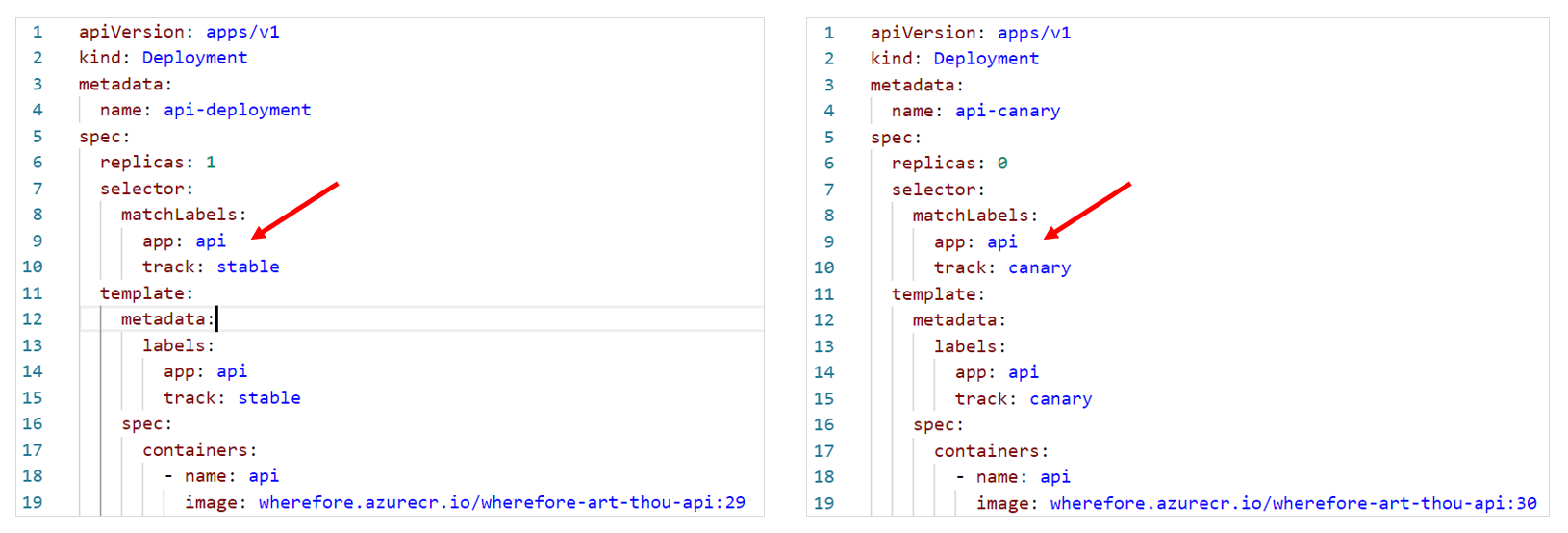
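To make the routing chain concrete, here is a minimal sketch of what the Service and Ingress could look like. The `api-service` name and the `app=api` label are the ones used in this post; the `/api` path, the `app-service` name, the Ingress name, the port numbers and the NGINX ingress class are assumptions for illustration only.

```yaml
# Service: selects every pod carrying the label app=api.
apiVersion: v1
kind: Service
metadata:
  name: api-service
spec:
  selector:
    app: api
  ports:
  - port: 80
    targetPort: 80          # assumed container port
---
# Ingress: maps request paths to Service names.
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: wherefore-ingress   # hypothetical name
spec:
  ingressClassName: nginx   # assumes an NGINX ingress controller is installed
  rules:
  - http:
      paths:
      - path: /api          # assumed path for the backend api
        pathType: Prefix
        backend:
          service:
            name: api-service
            port:
              number: 80
      - path: /
        pathType: Prefix
        backend:
          service:
            name: app-service   # hypothetical Service for the app
            port:
              number: 80
```

Note that the Service selector only mentions `app: api`. It does not care which deployment created a pod, which is exactly what the canary setup relies on.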

If we now create two deployments, one for the stable release and one for the canary release, we can have Azure DevOps control both from a DevOps Release Pipeline. We start with one replica on the stable track (in real life there would probably be multiple replicas) and zero replicas on the canary track. Because both deployments have the “app=api” label, the pods of both are considered valid endpoints for the api-service.
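As a rough sketch, the two deployments could be defined as follows. The deployment names, the `api` container name, the image repository and the `app=api` label come from this post; the extra `track` label, the image tag and the container port are assumptions added for illustration.

```yaml
# Stable track: starts with one replica.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: api-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: api
      track: stable         # assumed label, keeps the two selectors distinct
  template:
    metadata:
      labels:
        app: api            # matched by the api-service selector
        track: stable
    spec:
      containers:
      - name: api
        image: wherefore.azurecr.io/wherefore-art-thou-api:1   # placeholder tag
        ports:
        - containerPort: 80   # assumed port
---
# Canary track: same app label, starts with zero replicas.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: api-canary
spec:
  replicas: 0
  selector:
    matchLabels:
      app: api
      track: canary
  template:
    metadata:
      labels:
        app: api
        track: canary
    spec:
      containers:
      - name: api
        image: wherefore.azurecr.io/wherefore-art-thou-api:1   # placeholder tag
        ports:
        - containerPort: 80
```

The second label gives each deployment its own pod selector, so the two do not claim each other's pods, while the Service still matches both on `app: api`. Since the Service spreads connections over all ready pods, the canary's share of traffic is roughly its replica count divided by the total: with one pod on each track that is about half, so in practice you would run more stable replicas to keep the canary group small.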

Azure DevOps Release Pipeline

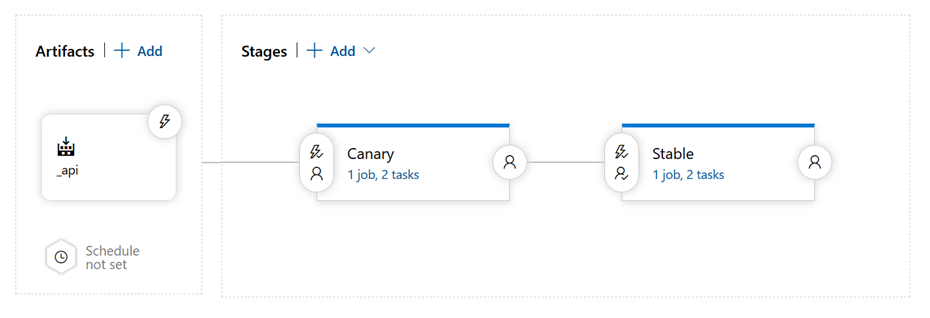

The multi-stage release pipeline can now control our Kubernetes deployments. We create 2 stages: Canary and Stable.

Both stages have 2 tasks: one for setting the correct image and one for scaling the deployment. These are standard Azure DevOps Kubernetes Tasks:

Canary stage:

    - task: Kubernetes@0
      displayName: 'Set Canary Image'
      inputs:
        kubernetesServiceConnection: 'wherefore-aks'
        namespace: default
        command: set
        arguments: 'image deployments/api-canary api=wherefore.azurecr.io/wherefore-art-thou-api:$(Release.Artifacts._api.BuildId) --record'

    - task: Kubernetes@0
      displayName: 'Scale Canary 1'
      inputs:
        kubernetesServiceConnection: 'wherefore-aks'
        namespace: default
        command: scale
        arguments: 'deployments/api-canary --replicas=1'

As you can see, we set the correct image for our api-canary deployment (in this example the artifact build ID is used for the image tag) and scale the canary deployment (api-canary) to 1 replica.

The Stable stage is just the opposite:

    - task: Kubernetes@0
      displayName: 'Set Deployment Image'
      inputs:
        kubernetesServiceConnection: 'wherefore-aks'
        namespace: default
        command: set
        arguments: 'image deployments/api-deployment api=wherefore.azurecr.io/wherefore-art-thou-api:$(Release.Artifacts._api.BuildId) --record'

    - task: Kubernetes@0
      displayName: 'Scale Canary 0'
      inputs:
        kubernetesServiceConnection: 'wherefore-aks'
        namespace: default
        command: scale
        arguments: 'deployments/api-canary --replicas=0'

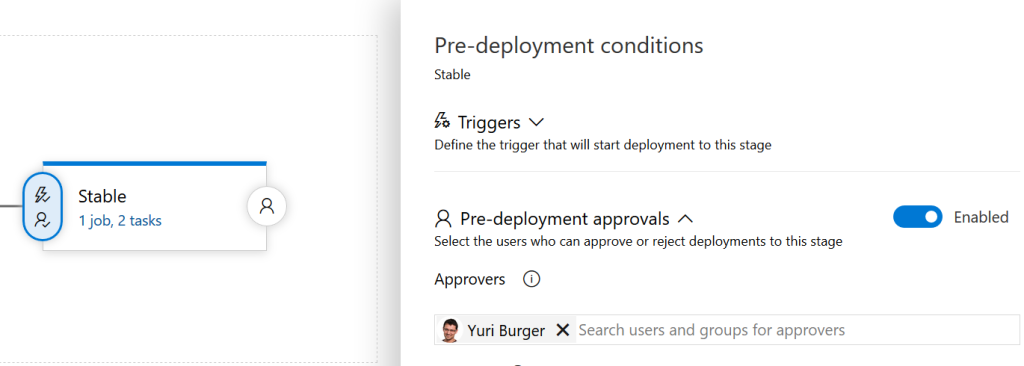

We set the correct image for our api-deployment and scale the canary deployment (api-canary) down to 0 replicas. One important missing piece is the pre-deployment approval for the Stable stage. If we do not enable this, the canary deployment is practically useless, because the release would immediately continue to the Stable stage without anyone having looked at the canary.

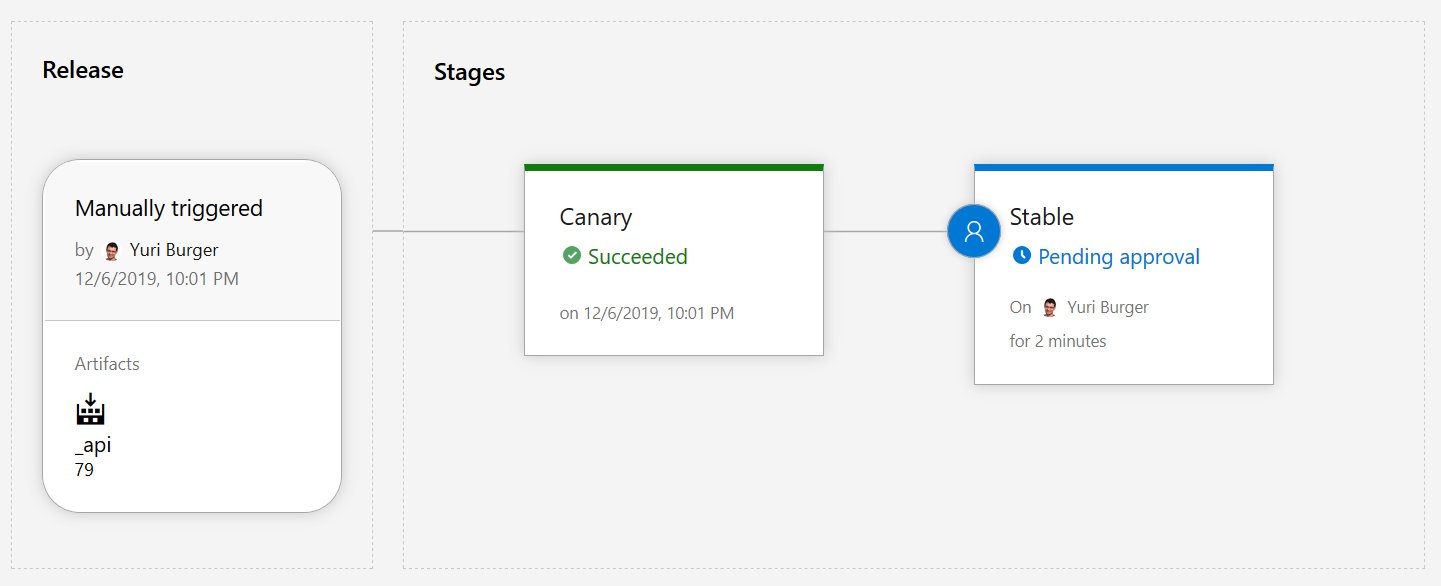
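This post uses a classic release pipeline, where the approval is a setting on the stage. If you prefer a YAML multi-stage pipeline, the same flow could be sketched roughly as below. This is an assumption-laden sketch, not the setup used here: the environment names `canary` and `stable` are hypothetical, and `$(Build.BuildId)` assumes the same pipeline run built and pushed the image. In YAML pipelines the approval is not written in the file; it is added as an "Approvals" check on the environment in the Azure DevOps UI.

```yaml
# Sketch only: YAML-pipeline variant of the Canary and Stable stages.
trigger: none

pool:
  vmImage: ubuntu-latest

stages:
- stage: Canary
  jobs:
  - deployment: DeployCanary
    environment: canary            # hypothetical environment name
    strategy:
      runOnce:
        deploy:
          steps:
          - task: Kubernetes@0
            displayName: 'Set Canary Image'
            inputs:
              kubernetesServiceConnection: 'wherefore-aks'
              namespace: default
              command: set
              arguments: 'image deployments/api-canary api=wherefore.azurecr.io/wherefore-art-thou-api:$(Build.BuildId)'
          - task: Kubernetes@0
            displayName: 'Scale Canary 1'
            inputs:
              kubernetesServiceConnection: 'wherefore-aks'
              namespace: default
              command: scale
              arguments: 'deployments/api-canary --replicas=1'

- stage: Stable
  dependsOn: Canary
  jobs:
  - deployment: PromoteStable
    environment: stable            # hypothetical; put the approval check on this environment
    strategy:
      runOnce:
        deploy:
          steps:
          - task: Kubernetes@0
            displayName: 'Set Deployment Image'
            inputs:
              kubernetesServiceConnection: 'wherefore-aks'
              namespace: default
              command: set
              arguments: 'image deployments/api-deployment api=wherefore.azurecr.io/wherefore-art-thou-api:$(Build.BuildId)'
          - task: Kubernetes@0
            displayName: 'Scale Canary 0'
            inputs:
              kubernetesServiceConnection: 'wherefore-aks'
              namespace: default
              command: scale
              arguments: 'deployments/api-canary --replicas=0'
```

With an approval check on the `stable` environment, the run pauses before the Stable stage, which gives you the same window to inspect the canary.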

If we now create a new release, things should work as expected. The release waits for the pre-deployment approval of our Stable stage, and in Kubernetes we have two deployments for our Api: one api-deployment and one api-canary.

If we approve the deployment, the api-deployment is upgraded to the new image and the api-canary is scaled down again: canary deployment with just Azure DevOps 😉

/Y.